PULP Dronet – Nano-UAV Inspired by Insects

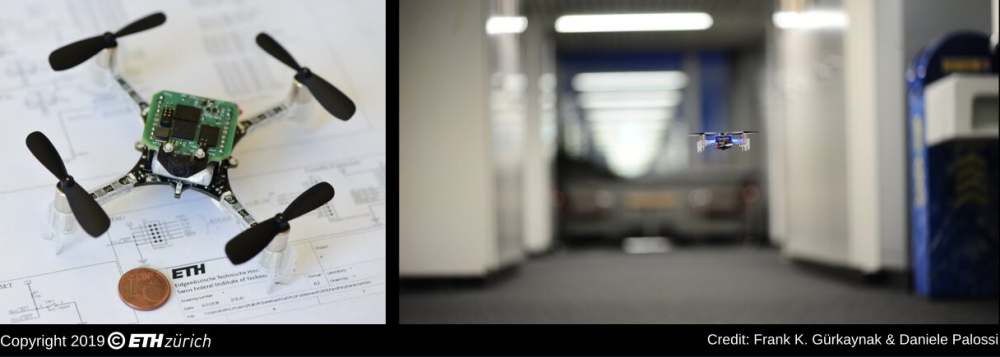

Researchers at ETH Zürich and the University of Bologna have recently created PULP Dronet, a 27-gram nano-size unmanned aerial vehicle (UAV) with a deep learning-based visual navigation engine. Their mini-drone, presented in a paper pre-published on arXiv, can run an end-to-end, closed-loop visual pipeline for autonomous navigation entirely on board, powered by a state-of-the-art deep learning algorithm.

“It is now six years that ETH Zürich and the University of Bologna are fully engaged in a joint-effort project: the parallel ultra-low power platform (PULP),” Daniele Palossi, Francesco Conti and Prof. Luca Benini, the three researchers who carried out the study at the lab led by Prof. Benini, told TechXplore via email. “Our mission is to develop an open source, highly scalable hardware and software platform to enable energy-efficient computation where the power envelope is of only a few milliwatts, such as sensor nodes for the Internet of Things and miniature robots such as nano-drones of a few tens of grams in weight.”

In large and mid-size drones, the available power budget and payload allow the use of powerful high-end computing devices, such as those developed by Intel, Nvidia and Qualcomm. These devices are not a feasible option for miniature robots, which are limited by their size and the resulting power restrictions. To overcome these limitations, the team decided to take inspiration from nature, specifically from insects.

“In nature, tiny flying animals such as insects can perform very complex tasks while consuming only a tiny amount of energy in sensing the environment and thinking,” Palossi, Conti and Benini explained. “We wanted to exploit our energy-efficient computing technology to essentially replicate this feature.”

To replicate the energy-saving mechanisms observed in insects, the researchers initially worked on integrating high-level artificial intelligence into the ultra-tiny power envelope of a nano-drone. This proved quite challenging, as they had to meet both the drone’s energy constraints and stringent real-time computational requirements. The researchers’ key goal was to achieve very high performance with very little power.

“Our visual navigation engine is composed of a hardware and a software soul,” Palossi, Conti and Benini said. “The former is embodied by the parallel, ultra-low power paradigm, and the latter by the DroNet Convolutional Neural Network (CNN), previously developed by the Robotics and Perception Group from the University of Zürich for ‘resource-unconstrained’ big drones, that we adapted to meet energy and performance requirements.”

The navigation system takes a camera frame and processes it with a state-of-the-art CNN. Subsequently, it decides how to correct the drone’s attitude so that it is positioned in the center of the current scene. The same CNN also identifies obstacles, stopping the drone if it senses an imminent threat.
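The decision step described above can be illustrated with a short sketch. It is not the researchers' implementation: it assumes the CNN returns a steering value in [-1, 1] and a collision probability in [0, 1], and the names `Command` and `navigation_step`, the smoothing factor, the yaw-rate limit and the stop threshold are all hypothetical. The 1.5 m/s speed cap simply reuses the flight speed reported in the field experiments.

```python
from dataclasses import dataclass


@dataclass
class Command:
    forward_speed: float  # metres per second
    yaw_rate: float       # radians per second (sign convention is an assumption)


def navigation_step(steering: float, collision_prob: float, prev: Command,
                    max_speed: float = 1.5, max_yaw_rate: float = 1.0,
                    smoothing: float = 0.5, stop_threshold: float = 0.9) -> Command:
    """Turn one pair of (assumed) CNN outputs into a flight command."""
    # Clamp the network outputs to their assumed valid ranges.
    steering = max(-1.0, min(1.0, steering))
    collision_prob = max(0.0, min(1.0, collision_prob))

    # Low-pass filter the yaw command so the drone steers smoothly
    # back towards the centre of the lane or corridor.
    target_yaw = steering * max_yaw_rate
    yaw_rate = (1.0 - smoothing) * prev.yaw_rate + smoothing * target_yaw

    # Brake immediately when a collision looks imminent.
    if collision_prob >= stop_threshold:
        return Command(forward_speed=0.0, yaw_rate=yaw_rate)

    # Otherwise slow down in proportion to the estimated collision risk.
    target_speed = (1.0 - collision_prob) * max_speed
    speed = (1.0 - smoothing) * prev.forward_speed + smoothing * target_speed
    return Command(forward_speed=speed, yaw_rate=yaw_rate)


if __name__ == "__main__":
    hover = Command(forward_speed=0.0, yaw_rate=0.0)
    print(navigation_step(steering=0.2, collision_prob=0.1, prev=hover))
    print(navigation_step(steering=0.0, collision_prob=0.95, prev=hover))
```

Calling `navigation_step` once per camera frame and feeding each result back in as `prev` gives a simple closed loop. A real controller would also need state estimation and low-level attitude control, which this sketch leaves out.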

“Basically, our PULP Dronet can follow a street lane (or something that resembles it, e.g. a corridor), avoiding collisions and braking in case of unexpected obstacles,” the researchers said. “The real leap provided by our system compared to past pocket-sized flying robots is that all operations necessary to achieve autonomous navigation are executed directly onboard, without any need of a human operator, nor ad-hoc infrastructure (e.g. external cameras or signals) and in particular, without any remote base station used for the computation (e.g., remote laptop).”

In a series of field experiments, the researchers demonstrated that their system is highly responsive and can prevent collisions with unexpected dynamic obstacles at flight speeds of up to 1.5 m/s. They also found that their visual navigation engine is capable of fully autonomous indoor navigation along a previously unseen 113 m path.

The study carried out by Palossi and his colleagues introduces an effective method that integrates an unprecedented level of intelligence in devices with very strict power constraints. This is in itself quite impressive, as enabling autonomous navigation in a pocket-size drone is extremely challenging and has rarely been achieved before.

“In contrast to a traditional embedded edge node, here, we are constrained not only by the available energy and power budget to perform the calculation, but we are also subject to a performance constraint,” the researchers explained. “In other words, if the CNN ran too slowly, the drone would not be able to react in time to prevent a collision or turn at the right moment.”
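A rough calculation shows why this performance constraint matters. The sketch below computes how far the drone travels between two consecutive navigation decisions. The 1.5 m/s speed is the figure reported in the field experiments, while the frame rates and the function name `distance_per_inference` are hypothetical values chosen only for illustration.

```python
def distance_per_inference(speed_m_s: float, frames_per_second: float) -> float:
    """Distance in metres covered between two consecutive CNN decisions."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return speed_m_s / frames_per_second


if __name__ == "__main__":
    speed = 1.5  # m/s, the top speed reported in the field experiments
    for fps in (2, 6, 20):  # hypothetical inference rates
        metres = distance_per_inference(speed, fps)
        print(f"{fps:>2} frames/s: {metres:.2f} m travelled between decisions")
```

The lower the inference rate, the further the drone flies without a fresh decision, which is why throughput counts alongside power consumption.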

The tiny drone developed by Palossi and his colleagues could have numerous immediate applications. For instance, a swarm of PULP Dronets could help to inspect collapsed buildings after an earthquake, reaching places that are inaccessible to human rescuers in less time and without putting the lives of operators at risk.

“Every scenario where people would benefit from a small, agile, and intelligent computational node is now closer, spanning from animal protection to elderly/child assistance, inspection of crops and vineyards, exploration of dangerous areas, rescue missions and many more,” the researchers said. “We hope our research will improve the quality of life of everyone.”

According to Palossi and his colleagues, their recent study is merely a first step towards enabling truly ‘biological-level’ onboard intelligence, and there are still several challenges to overcome. In their future work, they plan to address some of these challenges by improving the reliability and intelligence of the onboard navigation engine, targeting new sensors, more sophisticated capabilities and better performance-per-watt. The researchers publicly released all their code, datasets and trained networks, which could also inspire other research teams to develop similar systems based on their technology.

“In the long run, our goal is to achieve results similar to what we presented here on a pico-size flying robot (a few grams in weight, with the dimension of a dragonfly),” the researchers added. “We believe that creating a strong and solid community of researchers and enthusiasts hinged on our vision will be fundamental to reach this ultimate goal. For this reason, we made all our code and hardware designs available as open-source for everyone.”
